Databases

Further Reading: For a ridiculously great comprehensive overview of database design, read Designing Data-Intensive Applications by Martin Kleppmann

General Recommendations for Databases

- Use good connection management practices, such as connection pooling and exponential backoff, secure and isolate connections to your database

- Shard your database instances whenever possible

- Avoid very large transactions, but don’t send too many small ones – batch them!

- When migrating a database that requires a quick cutover, don’t get caught up in the batch movement, but also look for streaming the most recent data using a tool like Cloud Dataflow

- Look out for tricky questions like, “reimplementing” or “replacing” vs. finding equivalent services with a lift and shift. For example, a customer may be using a Hadoop or Spark server, but that doesn’t mean Cloud Dataproc is the best replacement, even if it’s simpler. If the goal is to reimplement a new system, be open to new tools suited for the job.

Relational

Cloud SQL

- Fixed Schema, fully-managed and reliable MySQL, PostgreSQL, or SQL Server

- If you need specific SQL Server capabilities, like SSRS, run the services on a GCE VM and connect to the Cloud SQL instance

- Also, run the whole stack on your own VMs if you need license portability or pre-configured images for SQL Server

- Supports automatic replication, backup, and failover

- Use the new serverless Database Migration Service or restore your database from a backup to migrate to Cloud SQL

- Up to 64 TBs of storage, 40,000 IOPS, 416 GB of RAM, 4000 concurrent connections

- Best Practice: It’s better to create multiple instances with fewer database tables rather than giant cloud SQL instances

- Scaling compute resources for Cloud SQL is manual, but storage can be automated using read replicas with semisynchronous replication to scale out

- If you enable automated storage increases, Cloud SQL checks your available storage every 30 seconds. If the available storage falls below a threshold size, Cloud SQL automatically adds additional storage capacity. If the available storage repeatedly falls below the threshold size, Cloud SQL continues to add storage until it reaches the maximum of 64 TB.

- The automatic storage increase setting of a primary instance automatically applies to any read replicas of that instance. The automatic storage increase setting cannot be independently set for read replicas.

- Best for transactional workloads (OLTP), not analytics (OLAP)

- Regional Service, it can span zones but not regions

- Not great for low-latency retrieval of time-series data

- Good for web frameworks (ecommerce) and content management systems (CMS)

- Read replicas improve performance, failover replicas are used for high availability

- HA Overview: https://cloud.google.com/sql/docs/sqlserver/high-availability

- HA is provided using a regional persistent disk

- Use gcloud sql instances create –availability-type=REGIONAL or

- gcloud sql instances patch [INSTANCE_NAME] –availability-type REGIONAL

- Read Replicas: https://cloud.google.com/sql/docs/mysql/replication/cross-region-replicas

- Read replicas are typically used for regional migrations, improved performance, or DR scenarios

Cloud SQL Recommendations

- Use Cloud SQL Auth Proxy whenever possible for added security

- Additionally, use private IP networking (VPC) and Cloud SQL proxy without a public IP address for additional security, limit administrative privileges

- If you need public IP networking, use the built-in firewall with a narrow IP list and require SSL, if possible, use a Cloud SQL Proxy and restrict to authorized networks

Cloud Spanner

- Fixed Schema, first horizontally scalable, strongly consistent, relational database service

- Combines the benefits of relational database structure with non-relational horizontal scale

- Regional, Multi-Regional, or Global service

- Good for when you’ve outgrown Cloud SQL

- Supports stale reads and timestamp bounds

- Can scale from 1 to hundreds or thousands of nodes. A minimum of 3 nodes is recommended for prod, and a node is actually 3 instances (replicas) under the hood

- Scales to petabytes, built-in high availability

- Use for financial and inventory applications, gaming (e.g. Pokemon Go), global meta-data, RDBMS+ at scale, transactions, ad/fin/martech

- Fully managed ACID relational database. No planned downtime for anything

- There is also a PostgreSQL interface for Cloud Spanner generally available as of July 2022

- Multi-regional is 5 9’s, regional is 4 9’s.

AlloyDB for PostgreSQL

- Fully managed PostgreSQL-compatible database for enterprise workloads

- Great migration target for Oracle databases

- 100x faster than standard PostgreSQL databases for analytics queries

- Built-in Vertex AI (Machine Learning) capabilities

- AllowDB Omni can be downloaded installed locally or on-prem to run this anywhere

- Storage is fully managed, only charged for what you use – and no additional storage cost for read replicas

- Root resource of a PostgreSQL deployment in AlloyDB is a cluster that contains a single primary instance for reading and writing, and 0 or more read pool instances for read access. Can scale the nodes in each read pool on an as-needed basis

- 99.99% (4 9’s) SLA

If you don’t need full relational capabilities, consider a NoSQL service!

Non-Relational or NoSQL (Not Only SQL!)

Cloud Bigtable

- NoSQL, Zonal, Schemaless, Key Value Store with eventual consistency

- Analytical and Operational Workloads such as Time Series, Marketing, Financial Data, AdTech, IoT streaming

- Scale to Terabytes or Petabytes

- Ideal for MapReduce

- Supports open-source HBase API

- Integrates with Hadoop, Dataflow, Dataproc

- Scales seamlessly and unlimitedly, storage autoscales

- Processing nodes must be scaled manually and you pay per node hour

- High throughput, heavy read and write events

- Cost-efficient, highly available, and low-latency. It scales well. Best of all, it is a managed service that does not require significant operations work to keep running.

- Real-time analytics like personalization and recommendations, used for Spotify’s Discover Weekly

Best Practices (More here)

- Design row key with the most common query in mind, don’t hash your row key

- Design row key to minimize hot spots, don’t start with a timestamp or use sequential numbers

- Tables should be tall and narrow, store changes as new rows

- Use short column names, organize into column families, designed for sparse tables

- This is a no-ops solution, it auto-balances, replicates, compacts, etc.

Cloud Datastore

- NoSQL, Schemaless, hierarchical, mobile, web. Think JSON document data from web apps

- Good for user profiles, product catalogs, or storing game state

- Managed and autoscaled NoSQL database with indexes, queries, and ACID transaction support

- Regional and multi-regional service

- No joins or aggregates, must line up with indexes

- Automatic built-in indexes for simple filtering and sorting, and you can create manual indexes

- Pay for storage and IO operations

- Transactions

Best Practices

- Use batch operations for reads, writes, and deletes instead of single operations

- If a transaction fails, try to rollback the transaction

- Use asynchronous calls where available

- Don’t update a single entity too rapidly (more than once per second)

- Avoid high read or write rates to lexicographically close documents (hotspotting)

- Gradually ramp up traffic to new kinds or portions of the keyspace

- Avoid deleting large numbers of entities across a small range of keys

- Use sharding (break up an entity into smaller pieces) or replication (storing N copies of the same entity) to deal with hotspots

Cloud Firestore

- Firestore is the next version of Datastore

- Two modes: native or Datastore mode for backward compatibility

- Multi-Regional, Serverless, Pay for what you use

- Highest net promoter score, very easy to start with

- Good for user profiles, user messaging, product catalogs, game state, offline/edge data

- NoSQL document database with real-time client updates via managed websockets

- Contains collections, documents, and contained data

- Pay for operations and much less for storage and transfer

- Simplifies storing, syncing, and querying data

- Mobile, web, and IoT apps at a global scale

- Live synchronization and offline support

- Security features

- ACID transactions

- Multi-region replication

- Powerful query engine

Cloud Memorystore

- Fully managed Redis or Memcached

- Good for caching web/mobile app data like user sessions or game state, documented as our best practice for leaderboards (hint hint, case study!)

- Up to 300 GB instances for Redis, 5TB for Memcached

- Redis provides 12 Gbps throughput, sub-millisecond latency

- Easy to lift and shift

- Schemaless

Firebase Realtime Database

- Not to be confused with the wider Firebase platform, which exists to accelerate app development with fully managed backend infrastructure

- Consider using Firestore instead

- Single, potentially huge JSON doc located in central US

- Pay more for GB/month stored and GB downloaded

- NoSQL

Data & Analytics Tools

Cloud Dataflow

- Smartly-autoscaled fully managed batch or stream MapReduce-like processing

- General purpose tool

- Released as open-source Apache Beam project

- Autoscales and dynamically redistributes lagging work to optimize run time

- Serverless, fast, cost-effective service for streaming and batch processing

- Read the data from a source into P (parallel) collection, Transform it into a new P collection, Write final P Collection to a sink

- Use Apache Beam SDK open source libraries (Java, Python) – requires familiarity with scripting languages

- Automates infrastructure and cluster management, dynamically scales up and down

- Dataflows are deployed using jobs, can customize the Compute Engine workers and can autoscale. Separates compute from storage

- Start with templates, or write a SQL statement, or use an AI Notebook. Pre-built templates with custom options, these custom templates can then be shared.

- An example is from Cloud Storage or Pub/Sub to BigQuery.

- Can monitor job metrics at step and worker level.

- If you’re building a new data processing pipeline from scratch

- Can also process images (like photos, not VM images)

- Fully managed no-ops, Google manages rebalancing underlying compute cluster

Cloud Dataprep

- Visually explore, clean, and prepare data for analysis without running servers

- Data Wrangling tool for business analysts, not IT pros

- For people who might otherwise spend their time cleaning data

- Managed by Trifacta Wrangler

- You pay for the underlying Dataflow job and management overflow

- Another no-ops, fully-managed service

- UI-Driven data preparation

- Scales on-demand

- Not automated, ongoing, more of a one-time setup

- Under the hood, it creates a Beam pipeline and runs it on Dataflow

Cloud Dataproc

- Batch MapReduce processing via configurable, managed Spark and Hadoop clusters.

- Can add or remove nodes, even while running jobs

- Pay for underlying compute servers, can use preemptible for all but one node

- Should go with Cloud Dataflow if building new, not migrating

- Crosses over between infrastructure and workflows

- Big data batch processing ETL/ML

- Out of the box support for popular open source software, move on prem OSS clusters to the cloud, Hadoop/Spark/Presto/Flink

- Scale up and down, even while jobs are running

- Use if you have existing Hadoop/Spark Applications

- Take advantage of Machine Learning/Data Science ecosystem

- Just for batch processing, not great at streaming – use Dataflow for streaming

- Tunable cluster parameters, the user is responsible for making sure the machines act appropriately for the data

- Best Practice: Use the Jobs API to scale Dataproc clusters, which reduces costs by running jobs in existing clusters

Cloud Composer

- Based on Apache Airflow for Workflow Orchestration

- Directed Acyclic Graphs (DAGs) are written in Python, stored in dag_folder

- Each task in a DAG can represent almost anything, like preparing data for ingestion, monitoring an API, sending an API, or running a pipeline

- Concerned with the instructions necessary to complete each step

- Computational workflows, data processing pipelines, dependency management, extensible operators to REST APIs.

- Open Source platform → supports hybrid and multi-cloud

- Related Blog Post

- Choosing the right orchestrator in Google Cloud

Data Catalog

- Fully managed and scalable metadata management service that organizations can use to quickly discover, manage, and understand all their data in Google Cloud

- Offers a simple and easy-to-use search interface for data discovery, a flexible and powerful cataloging system for capturing both technical and business metadata, and a strong security and compliance foundation with Cloud Data Loss Prevention (DLP) and Identity and Access Management integrations.

- Can add Structured Tags for business metadata to tables and columns within tables

Cloud Data Fusion

- Fully managed, cloud-native data integration service that helps users efficiently build and manage ETL/ELT data pipelines

- Graphical interface and a broad open source library of preconfigured connectors and transformations, Cloud Data Fusion shifts an organization’s focus away from code and integration to insights and action.

- Under the hood, it creates a Spark pipeline and runs it on a Dataproc cluster

Data Warehousing

BigQuery

Overview

- Fixed Schema Enterprise Data Warehouse. Not really a database, more of a warehouse to hand off to analysts.

- Normally don’t write apps on top of BQ because of the minimum several second latency

- Multi-regional serverless column-store data warehouse for analytics using SQL

- Focus on analytics instead of managing infrastructure.

- Storage – in a structured table that uses standard SQL, Ingest from Cloud Storage, Cloud Data Flow, and more formats, Query with SQL

- Serverless database, scales internally

- Pay for the GBs that BigQuery “considers” or “scans” during queries. If you make the same queries, cached results are free to return (for 24 hours)

- Data is cheap to store and gets cheaper over time

- “Streaming Inserts” are paid per GB

- Projects contain datasets, datasets contain tables

- Like Azure Synapse

- Tabular data

- Can query external data sources (using federation). External sources include other BQ instances, GCS, and Google Drive. Used when data is frequently changing and no desire to reload it. The downside is query performance will be impacted

- Job/Query History: view number of BQ jobs per person + details. Can be viewed via Web Console or Cloud Shell (bq ls ….)

Best Practices

- Avoid SELECT * – only query the columns that you need, sample data using preview options (which lets you view data for free without affecting quotas)

- Price your queries before running them, use the pricing calculator or a dry-run flag, the query validator will show how much data will be used

- Set a maximum bytes billed value to eliminate large queries from running

- Use clustering and partitioning to reduce the amount of data scanned (saves money…)

- For example, partition data by date and cluster by keyword values. There is a PARTITION BY command. It automatically knows what partition ranges to use.

- Use Clusters for something like tags. Stores alike data closer together, you can cluster on multiple columns. CLUSTER BY command. You can have one clustering key per table

- Set up clustering and partitioning in the beginning if possible

- You can require partitioning on queries to avoid expensive queries

- Break your query into stages by writing results to a destination table

- Using a LIMIT clause does not affect the amount of data that is read, it will still read everything

- Create a dashboard to view billing data, stream audit logs to analyze usage patterns

- There is no charge for loading data into BigQuery, but there is a charge for STREAMING data into it. Unless it needs to be available immediately, load it instead of streaming it.

- BigQuery has long-term storage pricing that kicks in when it is not edited after 90 days

- Use expiration settings to remove unneeded tables and partitions, good for experimentation or if you only need data for a limited amount of time

- Leverage Policy Tags to define access to your data which provides column-level security. This is good for classifying data by high/med/low – test this in monitor mode

- In BigQuery IAM roles, jobUser gets billed, dataViewer does not

- Authorized Views let you share query results with particular users/groups without giving them access to the underlying source data

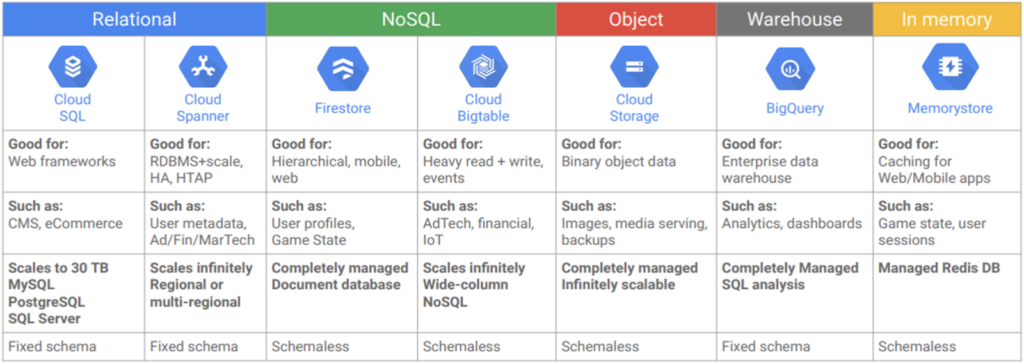

Database Selection Diagram

Additional Database Notes

- Lots of managed partner solutions on the marketplace and self managed options on Bare Metal Solution. Bare Metal Solution will be on purpose built hardware, low latency, and BYO DBAs or integration partners

- Fully self-managed databases can go on GCE or GKE

- Managed Wide-Column, Apache HBASE will always be Bigtable

- Cloud Native Databases are:

- Firestore

- Fast, fully managed serverless

- Real time at global scale

- Document Database Modernization (mongoDB and Couchbase)

- Scales DOWN well

- Bigtable

- Scales UP well

- Key-Value wide-column focusing on low latency, scalability, and reliability

- HBase API compatible

- Cloud Spanner

- OLTP and relational database services combined with horizontal scalability

- Good for OLTP/SQL Database Modernization

- Firestore